Computer-Aided Detection Leveraging Machine Learning and Augmented Reality

Technology Roadmap Sections and Deliverables

- 2AIAR - Computer-Aided Detection Leveraging Machine Learning and Augmented Reality

Roadmap Overview

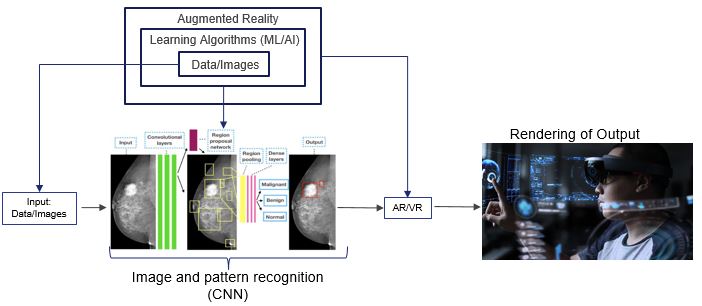

The working principle and architecture of CAD leveraging machine learning and augmented reality are depicted in the figure below.

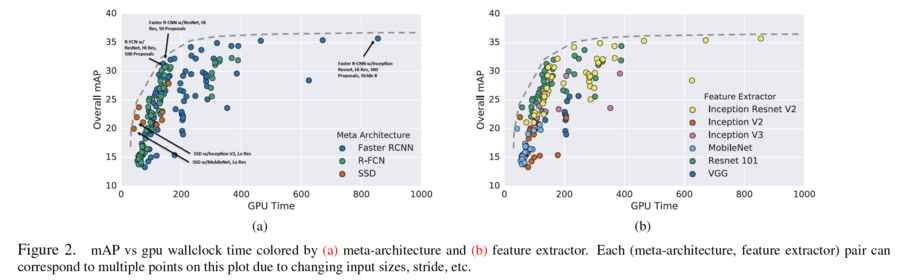

This technology combines Computer-Aided Detection (CAD) based on the Faster R-CNN deep learning model with Augmented Reality (AR), which provides a 3D rendering experience. Compared with what is available today, namely simple CAD using high-resolution image processing, this experience is intended to increase the accuracy of interpretation and therefore support the proper actions for problem solving. Faster R-CNN is based on a convolutional neural network with additional components for detecting, localizing, and classifying objects in an image. It adds a branch of convolutional layers, called the Region Proposal Network (RPN), on top of the last convolutional layer of the original network. The RPN is trained to detect and localize objects in the image regardless of their class. What differentiates this model is that it optimizes both the object detection and the classifier parts of the model at the same time.
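To make the idea of optimizing detection and classification at the same time more concrete, the following is a minimal sketch of a multi-task loss in the style used by Faster R-CNN: a log loss on the class plus a smooth L1 loss on the box coordinates. The function names and the `box_weight` parameter are illustrative assumptions, and this is a simplified illustration for a single proposal rather than the actual training code of the model.

```python
import math


def cross_entropy(probs, true_class):
    """Log loss: negative log-probability assigned to the true class."""
    return -math.log(probs[true_class])


def smooth_l1(pred_box, true_box):
    """Smooth L1 loss summed over the four box coordinates."""
    total = 0.0
    for p, t in zip(pred_box, true_box):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total


def joint_loss(probs, true_class, pred_box, true_box, box_weight=1.0):
    """Classification loss plus weighted box-regression loss.

    Assumption for this sketch: class 0 is background, which has no box
    to regress, so only the classification term applies in that case.
    """
    loss = cross_entropy(probs, true_class)
    if true_class != 0:
        loss += box_weight * smooth_l1(pred_box, true_box)
    return loss


# Hypothetical proposal: 90% confidence in class 1, box off by 2 units in x_max.
example = joint_loss([0.1, 0.9], 1, [0.0, 0.0, 3.0, 1.0], [0.0, 0.0, 1.0, 1.0])
```

Because both terms are summed into one scalar, a single gradient step updates the shared convolutional features for localization and classification together.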

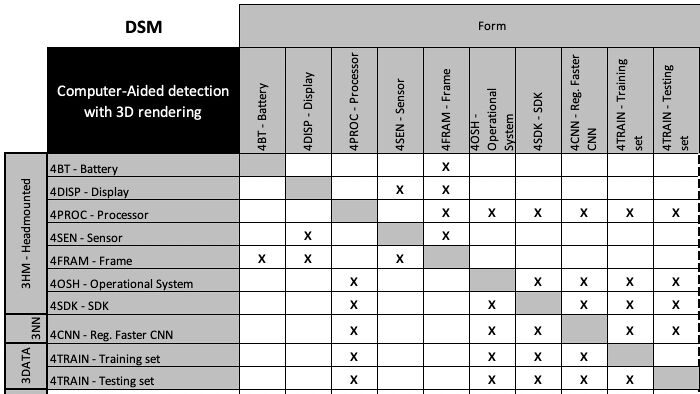

Design Structure Matrix (DSM) Allocation

The 2-AIAR tree that we can extract from the DSM above shows that the AI Model for AR Rendering aims to make two emerging technologies complement each other and deliver disruptive value. Both integrate to DETECT and RENDER images or interactive content using the following level-3 subsystems:

3HM head-mounted components, 3NN neural network algorithms, and 3DATA. In turn, these require enabling technologies at level 4, the technology component level: 4CNN, the layers used for the neural network algorithm; 4TRAIN, the data set used to train the model; and 4TEST, used for testing it.

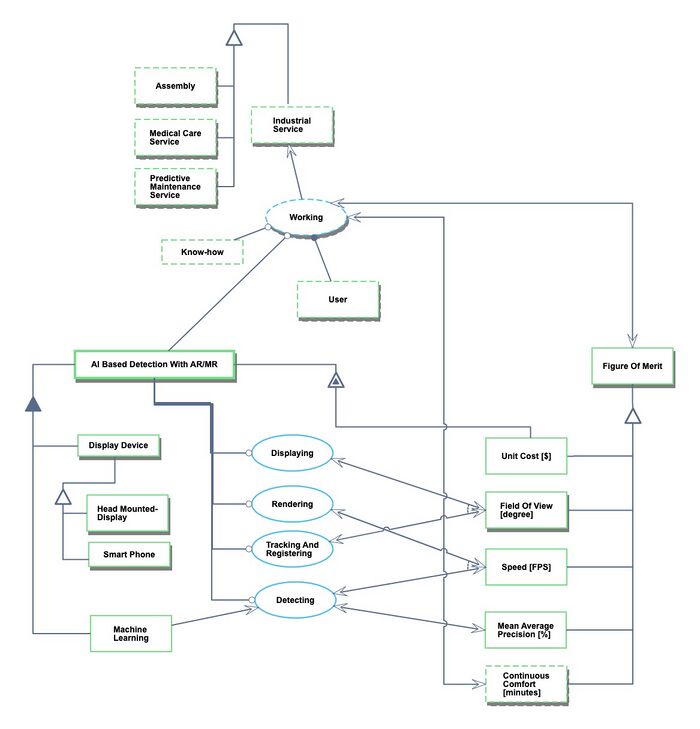

Roadmap Model using OPM

We provide an Object-Process-Diagram (OPD) of the 2CADAR roadmap in the figure below. This diagram captures the main object of the roadmap (AI and AR), its decomposition into subsystems (i.e. display devices such as head-mounted and smartphones, algorithms and models), its characterization by Figures of Merit (FOMs) as well as the main processes (Detecting, Rendering).

An Object-Process-Language (OPL) description of the roadmap scope is auto-generated and given below. It reflects the same content as the previous figure, but in a formal natural language.

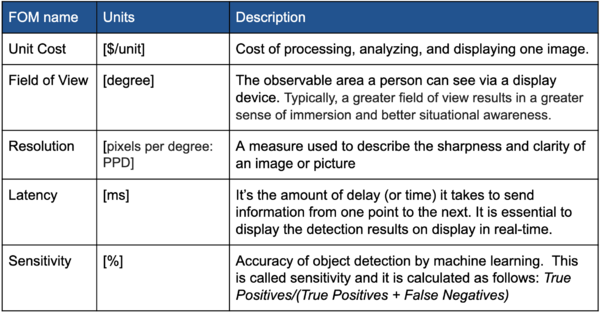

Figures of Merit

The table below lists the FOMs by which CAD with Machine Learning and AR can be assessed. The first FOM applies to the integrated solution of CAD with AI and AR: cost per unit represents the combined utility of both functions, detecting (ML/AI) and rendering (AR). The next two FOMs are specific to Augmented Reality: Field of View and Resolution. The last two FOMs are specific to the Machine Learning model: Sensitivity (related to accuracy) and Latency.

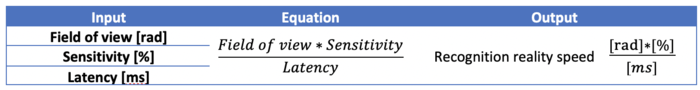
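As a small illustration of the Sensitivity FOM, the sketch below assumes the standard definition of sensitivity as true positives divided by all actual positives (true positives plus false negatives). The function name and the counts are hypothetical and are not taken from the roadmap's data.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual positives that the detector found."""
    actual_positives = true_positives + false_negatives
    if actual_positives == 0:
        raise ValueError("sensitivity is undefined without any actual positives")
    return true_positives / actual_positives


# Hypothetical counts: 90 objects detected, 10 missed.
example = sensitivity(90, 10)  # 0.9
```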

The table below contains a FOM to track over time; it combines three of the primary FOMs that underlie the technology. The purpose is to track accuracy, speed, and field of view, which are important for the overall user experience.

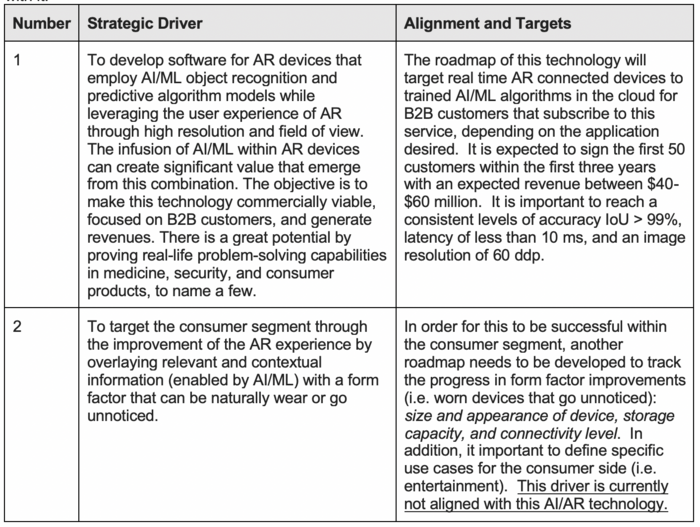

Alignment with “Company” Strategic Drivers: FOM Targets

The table below shows an example of potential strategic drivers and the alignment of the Computer-Aided Detection Devices Leveraging Machine Learning and Augmented Reality technology roadmap with them.

The list of drivers shows that the company views integrated AR/AI software as a potential new business and wants to develop it as a commercially viable (for-profit) business. The roadmap focuses on the B2B segment, not consumers. To support this, the technology roadmap performs some analysis, using the governing equations in the previous section, and formulates a set of FOM targets: an object detection accuracy, measured by IoU, of ~99% (lower accuracy may be acceptable depending on the industrial application), a very high resolution of 60dps, and a latency of 10 ms.

The roadmap confirms that it is aligned with this driver. This means that the analysis, technology targets, and R&D projects contained in the roadmap support the strategic ambition stated by driver 1. The second driver, however, which is to use this AI/AR technology for consumers or domestic applications, is not currently aligned with the roadmap. Our hypothesis is that for the consumer, the evolution of the form factor for the AR device plays a key role and another roadmap needs to be created.
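The accuracy target above is expressed in terms of IoU (intersection over union), which measures how well a predicted bounding box overlaps a ground-truth box. The following is a minimal sketch for axis-aligned boxes; the `(x_min, y_min, x_max, y_max)` box layout and the function name are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes offset by one unit share a 1x1 region: IoU = 1 / (4 + 4 - 1).
example = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

An IoU of 1.0 means the predicted and ground-truth boxes coincide exactly, so a target near 99% is very demanding.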

Positioning of Company vs. Competition: FOM charts

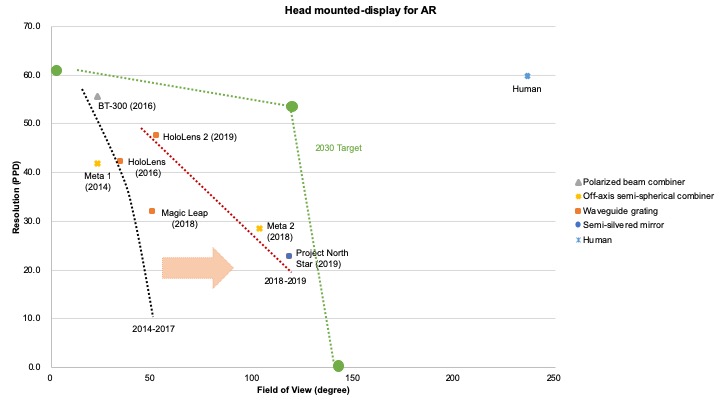

The figure below summarizes, from public data, the holographic AR devices (head-mounted displays and smart glasses) intended for AR applications.

In recent years, wearable devices other than smartphones that can execute AR/MR applications have been developed. Demand is increasing in industrial and entertainment fields because, for example, both hands can be used freely. However, few of these products appear to be commercially successful today.

These products can be categorized into smart glasses and head-mounted holographic AR devices.

- Head-mounted displays: HoloLens, HoloLens 2, Magic Leap One, Meta 2, and Project North Star

- Smart glasses: Google Glass and Epson BT-300

Among these products, HoloLens 2 and Magic Leap One appear to be ahead. For AI workloads, the GPU performance of HoloLens 2 seems to be excellent. Microsoft has applied its own unique architecture, optimized for a better AR/MR experience.

The chart groups the wearable devices by combiner type. Currently, the waveguide grating technique is attracting attention as a way to improve FoV; it is applied in HoloLens and Magic Leap One. The Pareto front, shown in black (2014-2017) and red (2019-2019) in the lower-left corner of the graph, shows the best tradeoff between Field of View and Resolution actually achieved. The transition from the black line to the red line indicates the trend of product improvement: across products, there appears to be a tendency to widen the Field of View without reducing the resolution. Based on our patent research, we predict the technology improvement shown by the green line. Human spatial cognitive limits will eventually also constrain these devices.
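A Pareto front of this kind can be extracted from a list of devices by keeping only those that no other device beats on both FOMs. The sketch below assumes that larger is better for both Field of View and Resolution; if the chart plots a resolution measure where smaller is better, that coordinate would need to be negated first. The data points are hypothetical placeholders, not measurements of real products.

```python
def pareto_front(points):
    """Return the points not dominated by any other point.

    Each point is (field_of_view, resolution); both are maximized.
    A point q dominates p if q is at least as good on both axes and
    strictly better on at least one.
    """
    front = []
    for i, p in enumerate(points):
        dominated = any(
            q[0] >= p[0] and q[1] >= p[1] and (q[0] > p[0] or q[1] > p[1])
            for j, q in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append(p)
    return sorted(front)


# Hypothetical (FoV, resolution) pairs for five imaginary devices.
devices = [(30, 40), (50, 30), (40, 35), (35, 20), (50, 25)]
front = pareto_front(devices)  # [(30, 40), (40, 35), (50, 30)]
```

Running the same function on data from two time periods gives the two fronts whose shift indicates the direction of product improvement.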

Technical Model: Morphological Matrix and Tradespace

Defining the use case was the starting point for defining the Forms and Functions needed to evaluate our FOMs and sensitivities. Our use case is identifying a criminal in a crowded street. A police officer walks through a crowded street wearing smart glasses. The glasses have sensors that scan people's faces. Each face image is processed by a machine learning program, which compares the image and identifies the person's ID (name, address, gender, criminal records). If the person has a pending criminal record issue, the glasses project a red box on the lens, indicating that this person should be arrested.

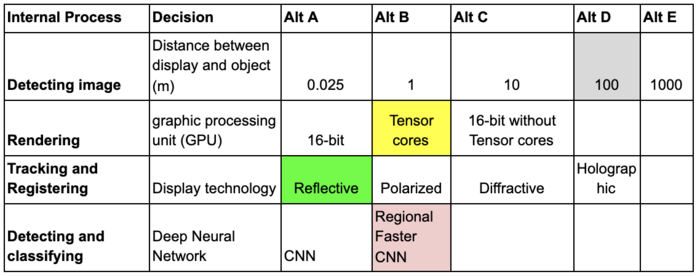

The Morphological Matrix (Table 1) captures the Level 1 architecture decisions that define our concept. From it, we can choose the FOM levels, the list of parameters, and the sensitivities.

The first and most important architecture decision is the distance between the image and the lens, because it affects both the hardware and the software specifications. We set the maximum distance at 100 meters. The other architecture decisions are colored in yellow, green, and pink to aid visualization.

Graphics Processing Unit (GPU) performance that supports Augmented Reality: FOM: FLOPS.

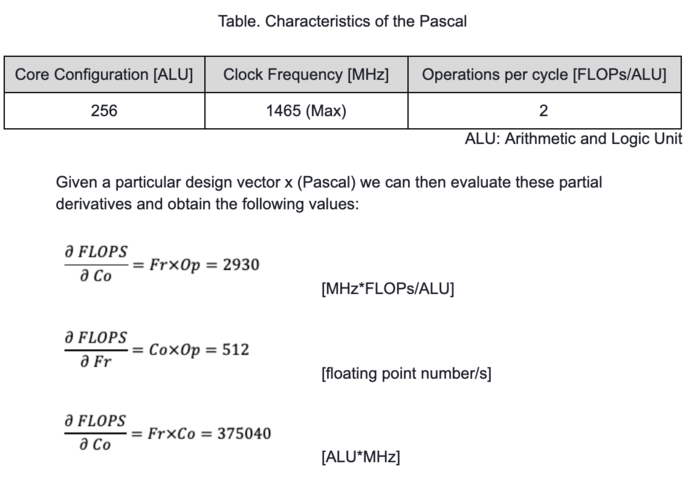

For AR applications, many elements must work in tandem to deliver a great experience. These include cameras, vision processing, graphics, and so forth, which increases the need for GPU compute capability to run the Convolutional Neural Networks that power the vision processing systems used by AR applications. In 2019, choosing a GPU is more confusing than ever: 16-bit computing, Tensor Cores, 16-bit GPUs without Tensor Cores (see the Morphological Matrix), and multiple generations of GPUs that are still viable (Turing, Volta, Maxwell). Still, FLOPS (floating-point operations per second) is a reliable performance indicator that can be used as a rule of thumb. Therefore, we view FLOPS as a Figure of Merit for AR. It also contributes to the performance of AI. The FLOPS equation is:

FLOPS= Co∗Fr∗Op
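The equation can be sketched in code as follows. This sketch assumes that Co is the number of cores, Fr is the clock frequency in Hz, and Op is the number of floating-point operations per core per cycle, which is the usual reading of a theoretical peak FLOPS formula. The function names and input values are hypothetical. The second function estimates the normalized sensitivity of the result to each parameter, which is the quantity a normalized tornado plot displays.

```python
def peak_flops(cores, frequency_hz, ops_per_cycle):
    """Theoretical peak FLOPS = Co * Fr * Op (symbol meanings are assumed)."""
    return cores * frequency_hz * ops_per_cycle


def normalized_sensitivity(func, params, name, rel_step=1e-6):
    """Relative change in output per relative change in one parameter,
    estimated with a small forward finite difference."""
    base = func(**params)
    bumped = dict(params)
    bumped[name] = params[name] * (1.0 + rel_step)
    return ((func(**bumped) - base) / base) / rel_step


# Hypothetical GPU: 512 cores at 1 GHz, 2 operations per cycle.
gpu = {"cores": 512, "frequency_hz": 1.0e9, "ops_per_cycle": 2}
flops = peak_flops(**gpu)  # 1.024e12
sensitivities = {k: normalized_sensitivity(peak_flops, gpu, k) for k in gpu}
```

Because the formula is a pure product, each normalized sensitivity comes out at approximately 1: a 1% increase in cores, frequency, or operations per cycle each yields about a 1% increase in FLOPS.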

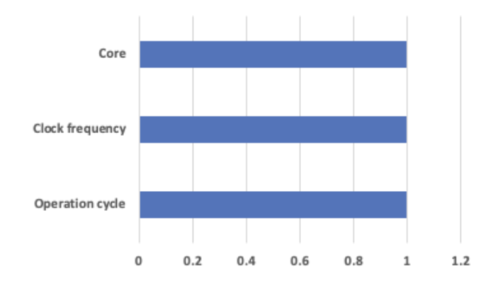

Therefore the normalized tornado plot:

Key Publications and Patents

Publications:

1. P. Milgram and F. Kishino.

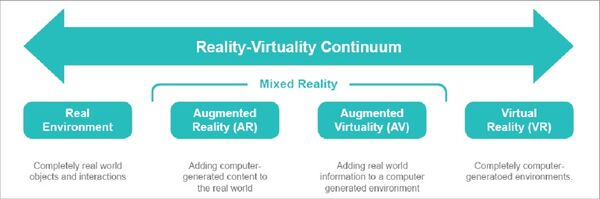

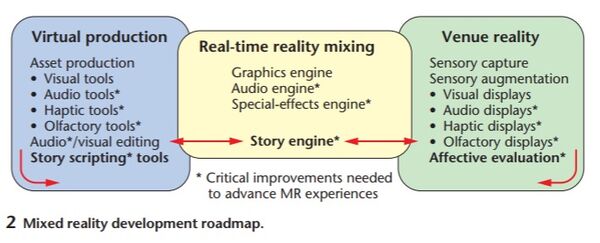

Historically, the concept of Mixed Reality (MR) was first proposed by Milgram and Kishino (1994). An MR experience is one in which a user enters one of the following interactive spaces:

- Real world with virtual asset augmentation (AR)

- Virtual world with augmented virtuality (AV)

P. Milgram and F. Kishino. (1994). A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Information and Systems, vol. E77-D, no. 12, pp. 1321-1329.

2. Hughes

Hughes (2005) gave shape to the concept of MR. MR content mixes visual and audio content for both AR and AV.

- AR: image, sound, smell, heat (the left side of the figure below).

- AV: a virtual world overlaid with real-world information obtained from devices such as cameras and sensors (the right side of the figure below), which is captured, rendered, and mixed by graphics and audio engines.

D. E. Hughes. (2005). Defining an Audio Pipeline for Mixed Reality. Proceedings of Human Computer Interfaces International, Lawrence Erlbaum Assoc., Las Vegas.

3. de Souza e Silva and Sutko

De Souza e Silva and Sutko (2009) refine the definition of Mixed Reality (MR): MR is the merging of real and virtual worlds to produce new environments and visualizations where physical and digital objects co-exist and interact in real time. This work removes the somewhat confusing concept of Augmented Virtuality (a virtual world overlaid with real-world information obtained from devices such as cameras and sensors) from MR and adds real space instead (the meaning has not changed). It also emphasizes spatiality, interactivity, and the real-time nature of MR.

Silva, A. de S. e., & Sutko, D. M. (2009). Digital cityscapes: merging digital and urban playspaces. New York: Peter Lang.

Currently, methods for realizing AR technology can be categorized as location-based AR and vision-based AR.

- Location-based AR: it presents information using location information that can be acquired from GPS and so forth.

- Vision-based AR: With technology such as image analysis and spatial recognition, Vision-based AR shows information by recognizing and analyzing a specific environment. Vision-based AR is further divided into two types: marker type AR and markerless type AR.

We consider applying AI to markerless vision-based AR. The following papers gave us insights into the design space for combining AI and AR.

4. Huang et al.

This paper aims to serve as a guide for selecting a detection architecture that achieves the right speed/memory/accuracy balance for a given application and platform. To this end, the authors investigate various ways to trade accuracy for speed and memory usage in modern convolutional object detection systems. Several successful systems have been proposed in recent years. Still, apples-to-apples comparisons are difficult because of different base feature extractors (e.g., VGG, Residual Networks), different default image resolutions, and different hardware and software platforms. The paper presents a unified implementation of the Faster R-CNN, R-FCN, and SSD systems, which are viewed as “meta-architectures.” It traces out the speed/accuracy trade-off curve created by using alternative feature extractors and varying other critical parameters, such as image size, within each of these meta-architectures.

Huang, J., Rathod, V., Sun, C., Zhu, M., Korattikara, A., Fathi, A., and Murphy, K. (2017). Speed/Accuracy Trade-Offs for Modern Convolutional Object Detectors. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi: 10.1109/cvpr.2017.351.

5. Liu et al.

This paper provides a perspective on AI-based object detection in AR applications. Most existing AR and MR systems can understand the 3D geometry of the surroundings but cannot detect and classify complex objects in the real world. Convolutional Neural Networks (CNNs) enable such capabilities, but it remains difficult to execute large networks on mobile devices. Offloading object detection to the edge or cloud is also very challenging because of the stringent requirements for high detection accuracy and low end-to-end latency. The long latency of existing offloading techniques can significantly reduce detection accuracy because the user's view changes in the meantime. To address the problem, the authors design a system that enables high-accuracy object detection for commodity AR/MR systems running at 60fps. The system employs low-latency offloading techniques, decouples the rendering pipeline from the offloading pipeline, and uses a fast object tracking method to maintain detection accuracy. The results show that the system can improve detection accuracy by 20.2%-34.8% for the object detection and human keypoint detection tasks, and requires only 2.24ms of latency for object tracking on the AR device. Thus, the system leaves more time and computational resources to render virtual elements for the next frame and enables higher-quality AR/MR experiences.

Liu, L., Li, H., and Gruteser, M. (2019). Edge Assisted Real-time Object Detection for Mobile Augmented Reality. MobiCom, ACM.
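To put the figures reported by Liu et al. in perspective, the following sketch works out the per-frame time budget at 60fps and how much of it remains after the 2.24ms spent on object tracking. The function names are illustrative assumptions, and the calculation ignores any other per-frame work on the device.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def remaining_budget_ms(fps, tracking_ms):
    """Frame time left for rendering after on-device object tracking."""
    return frame_budget_ms(fps) - tracking_ms


budget = frame_budget_ms(60)               # about 16.7 ms per frame
remaining = remaining_budget_ms(60, 2.24)  # about 14.4 ms left for rendering
```

Under these assumptions, tracking consumes roughly 13% of each frame, which is why the authors can argue that most of the frame time stays available for rendering.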

Patents:

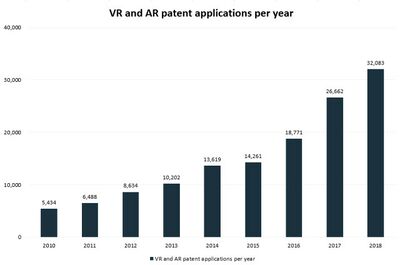

iam-media.com analyzed 140,756 patents. The figure given below illustrates the number of patent applications between 2010 and 2018. Patent filings have more than doubled in the past four years. The data shows that an increasing number of companies are applying for patent protection.

(Source: iam-media.com)

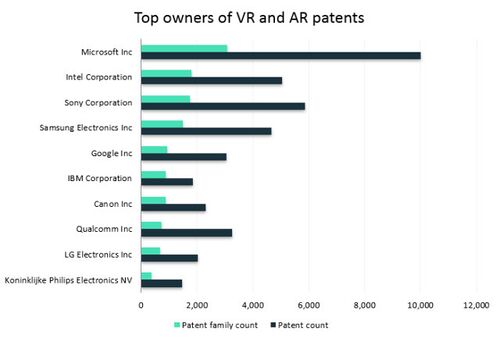

The figure shows that Microsoft, Intel, and Sony are the three most active VR and AR-related patent owners. In this section, we mention the Microsoft patents that are related to the field of view.

(Source: iam-media.com)

1. WAVEGUIDES WITH EXTENDED FIELD OF VIEW

Using embodiments described herein, a large FOV of at least 70 degrees, and potentially up to 90 degrees or even larger, can be achieved by an optical waveguide that utilizes intermediate-components to provide pupil expansion, even where the intermediate-components individually can only support an FOV of about 35 degrees. Additionally, where only a portion of the total FOV is guided to disparate intermediate-components, power savings of up to 50% can be achieved compared with a situation where the FOV is not split by the input-coupler.

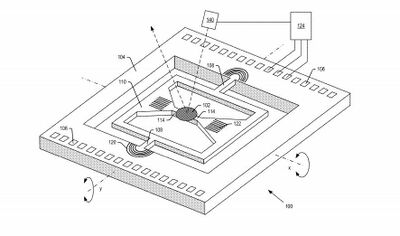

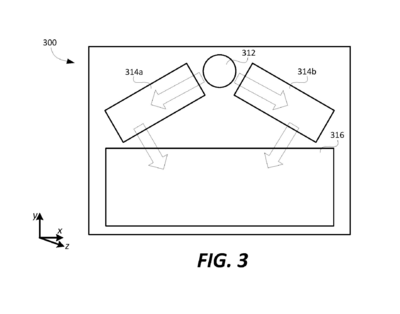

2. MEMS LASER SCANNER HAVING ENLARGED FOV

Like current augmented reality headsets with liquid crystal on silicon (LCoS) or digital light processing (DLP) display engines, a MEMS laser scanner projects images by reflecting light onto gratings on a display. While the FoV of a MEMS laser scanner display is usually only 35 degrees, the patent application calls for the display to generate light in two different directions, with the projected images potentially overlapping in the middle. As a result, the field of view could approach 70 degrees, according to the patent summary.
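The arithmetic behind the "approach 70 degrees" figure can be sketched with a simple model. This sketch assumes two equal sub-fields placed side by side along one axis, so that the combined field of view is the sum of the two minus any overlap in the middle. That model, the function name, and the overlap value are illustrative assumptions and are not taken from the patent text.

```python
def combined_fov_deg(fov_each_deg, overlap_deg=0.0):
    """Combined field of view of two equal, adjacent sub-fields."""
    if not 0.0 <= overlap_deg <= fov_each_deg:
        raise ValueError("overlap must be between 0 and the single-field FoV")
    return 2.0 * fov_each_deg - overlap_deg


no_overlap = combined_fov_deg(35.0)         # 70.0 degrees, the upper bound
some_overlap = combined_fov_deg(35.0, 5.0)  # 65.0 degrees with a 5-degree overlap
```

In this model, any overlap between the two projected images reduces the total, which is consistent with the field of view approaching but not necessarily reaching 70 degrees.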